Learning Programming

Early Stumbles and Wins

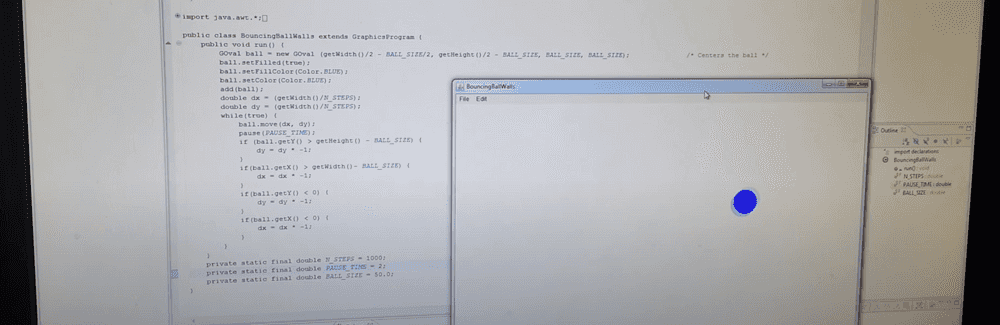

I first learned "programming" in college. I took a class along the lines of "CS 101", a basic programming class. It used C... and Linux. I hated it and probably thought I'd never program again. In the course, we learned common programming concepts (variables, loops, etc.) and did esoteric exercises that didn't excite me. A few years later, I decided to try again on my own and took a class on iTunes U called Programming Methodology. It was also an introductory course, but this time it used a real IDE with some autocomplete (Eclipse) and a "useful" programming language (Java). I did some esoteric stuff, but I also made a ball bounce around a screen. I was so excited about that ball that it was one of the first videos I posted to YouTube. To me, this was a whole new level: making the computer do something I cared about.

Dark-IT Projects

Since I had a bit of software development experience when I came to ChemPoint, I was always looking for opportunities to build apps that helped with team workflows. For example, I learned C#, .NET, and WPF (Windows Presentation Foundation) so that I could build a warehouse management app backed by SQL Server. Previously, the team used a shared Excel document, and because everyone had to close out of it before it could be updated, someone had to announce to everyone whenever it needed updating! We also constantly had freight-selling competitions, so I built a freight quoting application that used a couple of pricing APIs (SMC3 and freightquote.com) to give me data for judging whether our quotes were any good. I won a lot of competitions, and the company recognized my work with a "Helium Award", its second-highest employee recognition award.

This work also triggered my transition from the business side into the Technology team. I worked as a Business Analyst and Systems Analyst for our "Online Experience" group, which was just a few people in charge of our public-facing websites, some internal websites, and marketing stuff. When we started, there was basically no marketing team, and the site was... very old. Also, as a small team with little additional support, we were on our own to figure things out and get stuff done.

C#, .NET, and SQL

The first big project, which kicked off right when I started, was a website redesign along with a switch to a content management system (Kentico). So, I learned everything I could about Kentico and the ChemPoint.com website. It was an ASP.NET Web Forms app hosted on-premises on a Windows Server machine and served through IIS (Internet Information Services). As the Systems Analyst (and general jack-of-all-trades), I was in charge of managing the website's hosting, configuration, and deployment.

Later, we moved to a "satellite website" architecture, where the website is separated from the CMS. In our case, the site was built in ASP.NET MVC (the model-view-controller pattern) and written in C#. Any time a bug or question came up about the website's functionality, I'd dig into the code to find the answer, so I took some classes on C#, MVC, and related topics. Similarly, whenever someone had a reporting need, I could sometimes get the data from Kentico's UI, but querying the database directly was much easier to re-run if someone needed the report again later. So, I learned SQL (Structured Query Language) by querying a Microsoft SQL Server database, and I jumped at the chance to take a class on querying, managing, and structuring databases. These three things (.NET, C#, and SQL Server) opened huge doors for me, allowing me to start building my own tools and doing some development on work projects. Around this time, I also learned .NET's Entity Framework for those purposes.

Automated Build & Deploy (CI/CD)

We quickly realized the benefits of having multiple environments (dev, stage, and production) and of testing somewhere other than your local machine (i.e., in dev) as early as possible, but building and deploying to each environment was a slow, manual process. So, I set up TeamCity as a free solution to build our .NET apps from TFS (Team Foundation Server, our source control) and deploy them into dev on our Windows Servers. Eventually, when we moved to the cloud, we started using Octopus Deploy for deployments from on-premises into the cloud. Later still, we moved to Azure DevOps for our code repos, on-prem deployments, and cloud deployments. I built the build and release pipelines on all of these platforms and managed deployments for all of our websites (2 internal sites and 5 external marketing sites, each with 3 environments).

SEO & The Cloud

As we continued to mature and push SEO (search engine optimization), we wanted to move out of our on-premises environment and into the cloud. So, I learned Azure and set up an Azure App Service and Azure SQL Server for Kentico. Going even further, we also set up a CDN (content delivery network) using Azure CDN. This made the site much faster on the server side and gave users around the world a better, faster experience.

Marketing

As the company built out a marketing team, I also got the chance to dig into the various platforms it was using. For example, I set up Google Ads for the first time after taking a class on Lynda.com (now LinkedIn Learning). I also configured our email platforms and developed HTML emails. You'd think a web developer would have no problem making emails by this point, but wow... email is its own thing, with a million different email clients and devices to test. It was intense. I also learned a lot about spam, email deliverability, and device-specific features. I set up marketing campaigns in three systems: Salesforce ExactTarget (now Marketing Cloud), ClickDimensions (an extension for Dynamics CRM), and Marketo. This work included setting up DNS records for email deliverability and developing landing pages, emails, and email templates. I also contributed significantly on the technology side during platform selection for various website, martech, and advertising tools, such as call tracking (Infinity Call Tracking), marketing campaign attribution (Bizible), and ABM (account-based marketing) platforms.

Data, Analytics, and APIs

During this time, requests for data exploded. I ended up using our Business Intelligence team's platform, TIBCO Spotfire, for marketing-related reporting. The Business Intelligence team eventually migrated from Spotfire to Power BI, so I learned that platform for reporting as well.

I also developed some desktop applications for connecting to third-party data. For example, I made an app that connected to the Google Analytics API to unlock additional capabilities and automation. This led me to create a platform (a .NET Core REST API) that allowed analysts to connect to all of our third-party marketing platforms: Google Ads, Google Analytics, Bing Ads, LinkedIn Advertising, Google Search Console, Bing Webmaster Tools, Marketo, Infinity Call Tracking, Bizible, and Promoter.io. This significantly simplified access by abstracting away each platform's specific API and authentication. It currently powers data ingestion pipelines that bring the data on-prem for reporting and for raw analyst consumption via R or Python. Ultimately, the API supports reporting on most of the things our marketing team cares about: website statistics, email engagement, SEO information, advertising effectiveness, conversions, and NPS (Net Promoter Score).
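The production platform was a .NET Core REST API, so the following is only a rough, language-swapped sketch of the same abstraction idea as in-process Python. Every name here (`MetricRow`, `FakeDailyConnector`, `MarketingDataGateway`) is hypothetical, and the connector returns canned numbers instead of calling any real vendor API. The point is that callers ask for a source and a date range, while each connector keeps its own API calls and credentials to itself.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import Protocol


@dataclass(frozen=True)
class MetricRow:
    """One row shape shared by every source, so callers never see vendor formats."""
    source: str
    day: date
    metric: str
    value: float


class Connector(Protocol):
    """Anything with this method can plug in; it owns one platform's API and auth."""

    def fetch(self, start: date, end: date) -> list[MetricRow]: ...


class FakeDailyConnector:
    """Hypothetical stand-in for a real platform client.

    A real connector would hold that platform's credentials and call its API.
    This one returns the same value for every day so the sketch runs offline.
    """

    def __init__(self, source: str, metric: str, daily_value: float, api_key: str) -> None:
        self._source = source
        self._metric = metric
        self._daily_value = daily_value
        self._api_key = api_key  # credentials stay inside the connector

    def fetch(self, start: date, end: date) -> list[MetricRow]:
        days = (end - start).days + 1  # inclusive date range
        return [
            MetricRow(self._source, start + timedelta(days=i), self._metric, self._daily_value)
            for i in range(days)
        ]


class MarketingDataGateway:
    """Single entry point: callers name a source and a date range, nothing more."""

    def __init__(self) -> None:
        self._connectors: dict[str, Connector] = {}

    def register(self, name: str, connector: Connector) -> None:
        self._connectors[name] = connector

    def fetch(self, name: str, start: date, end: date) -> list[MetricRow]:
        if start > end:
            raise ValueError("start must not be after end")
        if name not in self._connectors:
            raise KeyError(f"unknown source: {name}")
        return self._connectors[name].fetch(start, end)


if __name__ == "__main__":
    gateway = MarketingDataGateway()
    gateway.register("web_analytics", FakeDailyConnector("web_analytics", "sessions", 120.0, api_key="placeholder"))
    gateway.register("email", FakeDailyConnector("email", "opens", 35.0, api_key="placeholder"))
    for row in gateway.fetch("email", date(2021, 3, 1), date(2021, 3, 2)):
        print(row)
```

With this shape, adding another platform means writing one more connector and registering it; analyst code that calls `fetch` doesn't change.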

Data Analysis Programming

With my skills in programming, SQL, and data visualization, I was already regularly coming across a ton of information about data science and machine learning in the industry news I read. So, my next step at ChemPoint was moving into the Business Intelligence (now Analytics) department as a data analyst. On my own, I took several classes in R, Python, statistics, and machine learning to become the best data analyst I could be. I started with R, and I really enjoyed how easy the Tidyverse (dplyr, ggplot2, tidyr, etc.) made building "data pipelines". This made repeatable reporting so much easier: connect directly to a source, process the data, and output an Excel or CSV file. I eventually "came to the light" and switched to Python, simply because I once had to work with a Data Engineer to convert an R script to Python, and it was painful. It only needed to happen once. I also helped the analytics team develop standards and style guides for our Python code using Cookiecutter Data Science, which encouraged the team to build small packages of Python code rather than all the logic being inside. This led
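The connect, process, output pattern described above can be sketched as a few small functions. This is an illustration, not the team's actual code: it sticks to Python's standard library (`sqlite3` with an in-memory database as a stand-in source, `csv` for output) so it runs without installs, whereas day-to-day work would more likely use pandas. The `web_traffic` table and its columns are hypothetical.

```python
import csv
import io
import sqlite3
from collections import defaultdict
from typing import TextIO


def extract(conn: sqlite3.Connection) -> list[tuple[str, int]]:
    """Connect step: pull raw rows straight from the source."""
    return conn.execute("SELECT channel, sessions FROM web_traffic").fetchall()


def transform(rows: list[tuple[str, int]]) -> list[tuple[str, int]]:
    """Process step: total sessions per channel, largest first (ties by name)."""
    totals: dict[str, int] = defaultdict(int)
    for channel, sessions in rows:
        totals[channel] += sessions
    return sorted(totals.items(), key=lambda item: (-item[1], item[0]))


def write_csv(summary: list[tuple[str, int]], out: TextIO) -> None:
    """Output step: write a CSV that can be opened in Excel."""
    writer = csv.writer(out)
    writer.writerow(["channel", "sessions"])
    writer.writerows(summary)


def run_report(conn: sqlite3.Connection, out: TextIO) -> None:
    """Re-runnable end to end: the same three steps every time."""
    write_csv(transform(extract(conn)), out)


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE web_traffic (channel TEXT, sessions INTEGER)")
    conn.executemany(
        "INSERT INTO web_traffic VALUES (?, ?)",
        [("search", 100), ("email", 40), ("search", 50)],
    )
    buffer = io.StringIO()
    run_report(conn, buffer)
    print(buffer.getvalue())
```

For a real file, you'd pass `open("report.csv", "w", newline="")` instead of the `StringIO` buffer. Keeping each step as its own function is also what makes the logic easy to move into a small shared package.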

Machine Learning

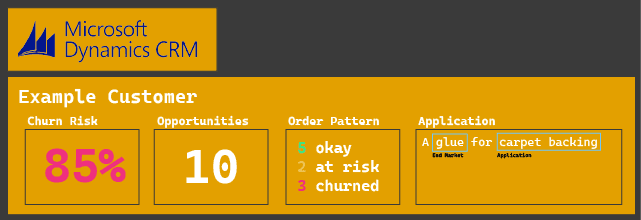

My first production ML project was predicting customer churn. We had an existing model that was kind of half-baked, so I reviewed it, improved it, and presented a proposal to our leadership team addressing some issues with the model and its usability. It turned out to be very important that the model was "explainable", so I learned LIME (Local Interpretable Model-agnostic Explanations) and implemented it as part of the project. The proposal was accepted, and I completed the project. I also set up the model training to run in Azure ML so that it was mostly automated. However, the overall process was very disjointed: the data was moved to Azure SQL weekly on Friday night, model training ran on Saturday, and on Sunday we ran a process to create action items in Microsoft Dynamics CRM for users to follow up on.

I've also developed **NLP** (natural language processing) models that use entity recognition techniques to process free-text fields in our CRM, creating more consumable data for reporting on trends. And, continuing the churn thread, I built an order pattern model that lets sellers know when a customer should be ordering, so they can follow up if an order is particularly late.
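The order pattern model isn't described in detail here, so the following is only a simple baseline heuristic for the same question ("is this customer's next order late?"), not the production model. It estimates a customer's typical gap between orders as the median of past gaps, predicts the next order from the last one, and flags the customer once the time since their last order exceeds that gap by a tolerance factor. The function names and the 1.5 default tolerance are arbitrary assumptions.

```python
from datetime import date, timedelta
from statistics import median


def typical_gap_days(order_dates: list[date]) -> float:
    """Median number of days between consecutive orders."""
    if len(order_dates) < 2:
        raise ValueError("need at least two orders to estimate a gap")
    dates = sorted(order_dates)
    gaps = [(later - earlier).days for earlier, later in zip(dates, dates[1:])]
    return median(gaps)


def expected_next_order(order_dates: list[date]) -> date:
    """Last order date plus the typical gap, rounded to whole days."""
    return max(order_dates) + timedelta(days=round(typical_gap_days(order_dates)))


def is_overdue(order_dates: list[date], today: date, tolerance: float = 1.5) -> bool:
    """True when the time since the last order exceeds `tolerance` x the typical gap."""
    days_since_last = (today - max(order_dates)).days
    return days_since_last > tolerance * typical_gap_days(order_dates)


if __name__ == "__main__":
    orders = [date(2021, 1, 1), date(2021, 1, 31), date(2021, 3, 2), date(2021, 4, 1)]
    print(expected_next_order(orders))                   # 2021-05-01
    print(is_overdue(orders, today=date(2021, 5, 20)))   # True (49 days > 1.5 x 30)
```

A median is used rather than a mean so that one unusually long gap doesn't stretch every future prediction; a real model would likely also account for seasonality and order size.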

Thanks to these efforts, our salespeople can see a machine-learning-powered view of a customer, like this:

ML Ops

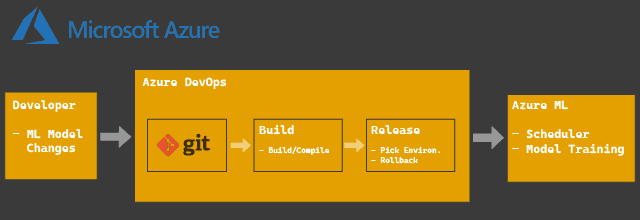

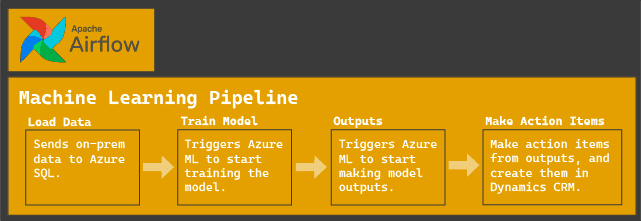

To build a proper pipeline, I created a proof of concept using Apache Airflow. It could run the various Python scripts on-premises (move the data, start model training, generate the outputs, and create the action items), confirming that each previous step actually completed without errors and sending alerts when something failed.
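Airflow handles scheduling, retries, and alerting for real, but the core dependency idea can be shown without it. This plain-Python sketch is not Airflow's API: it runs hypothetical steps in order, stops at the first failure so downstream steps never run on bad or missing inputs, and passes the error to an `alert` function. In Airflow itself, the same ordering is typically expressed by chaining tasks with `>>`, and alerts can be attached through an `on_failure_callback`.

```python
from typing import Callable

# A pipeline step: a name plus a zero-argument function that raises on failure.
Step = tuple[str, Callable[[], None]]


def run_pipeline(steps: list[Step], alert: Callable[[str], None]) -> list[str]:
    """Run steps in order; on the first exception, alert and skip the rest.

    Returns the names of the steps that completed successfully.
    """
    completed: list[str] = []
    for name, func in steps:
        try:
            func()
        except Exception as exc:  # any failure blocks the downstream steps
            alert(f"step '{name}' failed: {exc}")
            break
        completed.append(name)
    return completed


if __name__ == "__main__":
    # Hypothetical placeholders for the real scripts.
    def move_data() -> None:
        print("moving data")

    def train_model() -> None:
        raise RuntimeError("training data was empty")

    def generate_outputs() -> None:
        print("generating outputs")

    def create_action_items() -> None:
        print("creating CRM action items")

    done = run_pipeline(
        [
            ("move_data", move_data),
            ("train_model", train_model),
            ("generate_outputs", generate_outputs),
            ("create_action_items", create_action_items),
        ],
        alert=print,
    )
    print("completed:", done)
```

Unlike a fixed Friday/Saturday/Sunday schedule, each step here starts only after the previous one has actually succeeded, and a failure produces an alert instead of silently stale outputs.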

We have this set up for four machine learning models right now, and the stack gives us a strong foundation for future projects: getting everything set up for a new model takes relatively little effort.

As mentioned, I was heavily involved in developing an Analytics team platform for analysis and data science: Python, Azure ML, Airflow, Cookiecutter, internal REST APIs, and an internal Python package for code reuse. With all these systems and processes in place, the only thing missing was more automation! We used Azure DevOps for user story management, and I'd used it previously for website deployments, so setting up automated deployments for our ML projects and Airflow was a natural extension, and I did just that. Now, whenever the code changes, Azure DevOps automatically updates our model training and output-creation code in our development environment. We also have release pipelines that promote a model to stage and production once it has been validated.
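The validation criteria aren't spelled out above, so here is a hedged sketch of what a promotion gate could look like, assuming a single held-out score (such as AUC) compared against the model currently in use. The function name, the `min_gain` parameter, and the rule itself are hypothetical; in a release pipeline, a check like this could run as a script step that decides whether the next stage proceeds.

```python
from typing import Optional

# Hypothetical promotion order, mirroring the dev/stage/production environments.
ENVIRONMENTS = ["dev", "stage", "production"]


def promotion_target(
    current_env: str,
    candidate_score: float,
    baseline_score: float,
    min_gain: float = 0.0,
) -> Optional[str]:
    """Return the next environment if the candidate model passes validation.

    Assumed rule: the candidate's held-out score must be at least the current
    baseline plus `min_gain`. Returns None when the check fails or the model
    is already in production.
    """
    if current_env not in ENVIRONMENTS:
        raise ValueError(f"unknown environment: {current_env}")
    if candidate_score < baseline_score + min_gain:
        return None  # validation failed: do not promote
    index = ENVIRONMENTS.index(current_env)
    if index + 1 == len(ENVIRONMENTS):
        return None  # already at the last environment
    return ENVIRONMENTS[index + 1]


if __name__ == "__main__":
    print(promotion_target("dev", candidate_score=0.82, baseline_score=0.80))
    print(promotion_target("dev", candidate_score=0.78, baseline_score=0.80))
```

Keeping the rule in a small function means it can be unit-tested alongside the model code instead of living only in pipeline configuration.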